There’s a shift happening in how organisations think about AI. Until recently, most systems were reactive: they waited for a user prompt, produced static outputs, and handled one task at a time. That’s changing. Agentic AI is emerging as the next step. Instead of only responding to commands, these systems can make decisions, take action, and adjust as needed. They’re not just tools anymore. They’re more like collaborators that can work toward a goal.

At Sirocco, we work with organisations that are trying to make that shift in a responsible and structured way. We’re seeing it unfold not only in technical teams but across service, operations, marketing, and field functions. As a Salesforce partner, we share Salesforce’s view that AI should be safe, contextual, and trustworthy from the start.

Salesforce recently published a glossary of agentic AI terms. We’ve taken that list and expanded it for our audience: leaders like you who need practical understanding, not just definitions. We’ve grouped the terms into four categories: what agentic AI is, how it works, what makes it useful, and what keeps it safe. This list won’t make you an AI expert, but it will help you ask better questions, frame stronger discussions, and make decisions with more clarity.

What agentic AI actually means

Agentic AI marks a shift from passive tools to goal-oriented systems. These aren’t just helpful assistants. They can analyse context, make decisions, and carry out tasks independently.

Agentic AI

Systems that can take initiative, reason through complex tasks, and work toward defined outcomes. They operate beyond simple instructions, adjusting their approach as needed.

AI Agent

An individual system capable of acting on behalf of a user or process. Agents can interpret a goal and execute the steps required to achieve it without ongoing intervention.

Goal-Directed Behaviour

This is the core of agency. Rather than waiting for each instruction, an agent understands the intended result and acts in ways that move toward that outcome.

Autonomy

The ability to operate independently within set boundaries. Autonomy means the agent decides how to complete a task, not just when or in what order.

Planning

Agents don’t just react—they plan. They break larger goals into manageable steps, identify the tools or data needed, and execute tasks in the right sequence.
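To make planning more concrete, here is a minimal Python sketch that treats a plan as a set of steps with dependencies and works out an order that respects them. The goal, the step names, and the `PLAN` structure are hypothetical; real agent platforms represent plans in their own ways.

```python
from graphlib import TopologicalSorter

# Hypothetical plan for the goal "resolve a billing complaint".
# Each step maps to the set of steps that must finish before it can start.
PLAN: dict[str, set[str]] = {
    "fetch_account": set(),
    "check_invoices": {"fetch_account"},
    "check_payments": {"fetch_account"},
    "draft_reply": {"check_invoices", "check_payments"},
    "send_reply": {"draft_reply"},
}

def execution_order(plan: dict[str, set[str]]) -> list[str]:
    """Return the steps in an order that respects every dependency."""
    return list(TopologicalSorter(plan).static_order())

order = execution_order(PLAN)
print(order)
```

Steps with no dependency between them (here, the two checks) could run in either order, or in parallel.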

Multi-Step Reasoning

An agent can make decisions at multiple points in a process, adjusting its actions based on outcomes, failures, or new inputs. Rather than following a fixed path, it reasons, acts, checks the result, and iterates.

Uncertainty Handling

Agents often face incomplete or ambiguous inputs. Rather than forcing a decision, they pause, ask clarifying questions, or wait for more data—minimising unnecessary risks.
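One simple way to build this behaviour is a check that runs before the agent acts. The sketch below assumes a refund request with three required fields and a confidence score supplied by another part of the system; the field names and the 0.8 threshold are illustrative assumptions, not recommended values.

```python
from dataclasses import dataclass

# Assumed: the fields an agent needs before it can act on a refund request.
REQUIRED_FIELDS = ("order_id", "amount", "reason")
CONFIDENCE_THRESHOLD = 0.8  # hypothetical cut-off, tuned per use case

@dataclass
class Decision:
    action: str   # "proceed" or "ask"
    message: str

def decide(request: dict, confidence: float) -> Decision:
    """Proceed only when the inputs are complete and confidence is high enough."""
    missing = [f for f in REQUIRED_FIELDS if not request.get(f)]
    if missing:
        # Ask a clarifying question instead of guessing the missing values.
        return Decision("ask", "Could you confirm: " + ", ".join(missing) + "?")
    if confidence < CONFIDENCE_THRESHOLD:
        return Decision("ask", "I'm not certain I've understood. Can you confirm the request?")
    return Decision("proceed", f"Processing refund for order {request['order_id']}.")
```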

Simulation

Before committing to a course of action, agents can simulate different paths internally. This allows them to test assumptions, spot likely failures, and choose the most promising route forward.

What makes it work

Agentic systems aren’t just ‘smart’. They’re designed to operate in context, use resources efficiently, and adapt in real time.

Context Awareness

The agent understands where it is and what’s happening. That includes user history, business logic, and system state. It acts based on relevance, not just rules.

Memory

Memory allows the agent to keep track of information during a task and, in some cases, remember past interactions to improve future performance.

Tool Use

Agents don’t operate in isolation. They use APIs, scripts, and enterprise systems to carry out tasks. This makes them more flexible and more embedded in real workflows.
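Under the hood, tool use often comes down to a registry of approved functions and a dispatcher that runs whichever call the model asks for. This Python sketch assumes the model emits a JSON object with a `name` and `arguments`; the tools themselves are hypothetical stand-ins for CRM or API calls.

```python
import json
from typing import Any, Callable

# Registry of tools the agent is allowed to call, keyed by function name.
TOOLS: dict[str, Callable[..., Any]] = {}

def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Decorator that registers a function as an agent tool."""
    TOOLS[fn.__name__] = fn
    return fn

@tool
def get_case_status(case_id: str) -> str:
    # Hypothetical stand-in for a CRM lookup.
    return {"C-100": "Open", "C-200": "Closed"}.get(case_id, "Unknown")

@tool
def create_task(subject: str) -> dict:
    # Hypothetical stand-in for creating a follow-up task in a CRM.
    return {"subject": subject, "status": "Not Started"}

def run_tool_call(call_json: str) -> Any:
    """Execute a tool call shaped like {"name": ..., "arguments": {...}} (assumed format)."""
    call = json.loads(call_json)
    fn = TOOLS.get(call["name"])
    if fn is None:
        # Refuse anything that is not in the registry.
        raise ValueError(f"Unknown tool: {call['name']}")
    return fn(**call.get("arguments", {}))
```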

Retrieval-Augmented Action

To improve decisions, agents can retrieve external information in real time—like pulling from a knowledge base, live system state, or customer records—before acting. It’s what connects reasoning to reality.
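The sketch below shows the retrieve-then-act pattern in miniature. It uses a hypothetical three-article knowledge base and simple word-overlap scoring as a stand-in for a real search index or vector store, and it escalates rather than guessing when nothing relevant is found.

```python
import re

# Hypothetical knowledge base; a real agent might query a search index or CRM instead.
KNOWLEDGE_BASE = {
    "KB-1": "Refunds are available within 30 days of purchase with proof of receipt.",
    "KB-2": "Password resets require identity verification via email.",
    "KB-3": "Damaged items can be replaced free of charge within 14 days.",
}

def tokens(text: str) -> set[str]:
    """Lower-case words only; digits and punctuation are ignored."""
    return set(re.findall(r"[a-z]+", text.lower()))

def retrieve(query: str, k: int = 1) -> list[tuple[int, str, str]]:
    """Rank articles by the number of words they share with the query."""
    q = tokens(query)
    scored = [(len(q & tokens(text)), doc_id, text) for doc_id, text in KNOWLEDGE_BASE.items()]
    scored.sort(key=lambda s: s[0], reverse=True)
    return scored[:k]

def act(query: str) -> str:
    """Retrieve first, then act with a reference to the source used."""
    score, doc_id, text = retrieve(query)[0]
    if score == 0:
        return "No relevant source found; escalating to a human."
    return f"Based on {doc_id}: {text}"
```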

Orchestration

When tasks span multiple steps, systems, or users, the agent coordinates them. Orchestration allows agents to manage full workflows, not just isolated actions.

Delegation

An agent doesn’t need to do everything itself. It can route tasks to other agents, services, or people when that’s more effective.

Cross-Agent Collaboration

Multiple agents can share responsibilities across a process—one handling research, another performing actions, and another managing outcomes. This makes it easier to scale and manage complexity.

Real-Time Adaptation

Agents aren’t locked into a script. If something changes—like a new input, system failure, or updated request—they adjust their actions on the fly.

What makes it useful

For agentic AI to be valuable, it has to be explainable, relevant, and aligned with business outcomes. These terms cover how that’s achieved.

Personalisation

Agentic systems adapt to individuals. That could mean using a preferred tone, prioritising certain workflows, or skipping unnecessary steps based on history.

Feedback Loops

Agents improve when outcomes are tracked. Feedback—whether from humans or system signals—helps refine future actions and decisions.

Chain-of-Thought

Rather than jumping straight to an answer, the agent works through intermediate reasoning steps. When that reasoning is surfaced, it offers a clear path from input to output that people can follow and check, which builds trust.

Prompt Engineering

Even agentic systems rely on how they’re asked to do things. Clear, outcome-oriented prompts lead to better task execution and fewer missteps.

Grounding

This keeps the agent anchored to reliable data. Rather than generating output from scratch, a grounded agent references trusted sources like a CRM, policy database, or knowledge base.

Outcome Optimisation

Agents don’t just complete tasks—they evaluate how well the outcome meets the goal. This could include balancing accuracy, speed, cost, or compliance needs.

Goal Evaluation

As part of staying aligned, agents assess whether their actions are actually moving toward the intended result. If not, they can revise the plan, escalate, or stop.

Model Monitoring

Agentic systems require oversight. Monitoring tracks accuracy, drift, and performance changes—ensuring agents stay aligned and effective over time.

What keeps it safe

As agents take on more responsibility, organisations need ways to maintain control, uphold standards, and protect trust.

Human-in-the-Loop

Humans remain involved at key points. In high-risk or regulated contexts, agents flag decisions for approval rather than acting alone.
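A common way to build this in is to score actions by risk and route anything above a threshold to a review queue. In this sketch the action names, risk scores, and threshold are assumptions an organisation would set for itself; unknown actions default to needing approval.

```python
from dataclasses import dataclass, field

# Hypothetical risk scores per action type (1 = low, 5 = high).
RISK = {"send_faq_link": 1, "update_address": 2, "issue_refund": 4, "close_account": 5}
MAX_RISK = 5
APPROVAL_THRESHOLD = 4  # actions at or above this level need a human sign-off

@dataclass
class Agent:
    approval_queue: list[str] = field(default_factory=list)
    executed: list[str] = field(default_factory=list)

    def request(self, action: str) -> str:
        # Unknown actions are treated as maximum risk.
        if RISK.get(action, MAX_RISK) >= APPROVAL_THRESHOLD:
            self.approval_queue.append(action)
            return "pending_approval"
        self.executed.append(action)
        return "executed"

    def approve(self, action: str) -> None:
        """Called by a human reviewer to release a queued action."""
        self.approval_queue.remove(action)
        self.executed.append(action)
```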

Guardrails

These are built-in constraints—permissions, data boundaries, ethical limits—that prevent the agent from acting out of scope.
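Guardrails are often expressed as a policy that is checked before every action and every data access. This sketch assumes a hypothetical `service_agent` role with an allow-list of actions and a set of fields it must never see; anything not explicitly permitted is refused.

```python
# Hypothetical policy: actions each agent role may take, and fields it must never read.
POLICY = {
    "service_agent": {
        "allowed_actions": {"read_case", "update_case", "send_email"},
        "blocked_fields": {"credit_card_number", "national_id"},
    },
}

class GuardrailViolation(Exception):
    """Raised when an agent tries to act outside its permitted scope."""

def _policy_for(role: str) -> dict:
    # Deny by default: a role with no policy gets no permissions at all.
    if role not in POLICY:
        raise GuardrailViolation(f"No policy defined for role {role!r}")
    return POLICY[role]

def check_action(role: str, action: str) -> None:
    """Raise unless the role is explicitly allowed to perform the action."""
    if action not in _policy_for(role)["allowed_actions"]:
        raise GuardrailViolation(f"{role} may not perform {action}")

def filter_record(role: str, record: dict) -> dict:
    """Return a copy of the record with out-of-scope fields removed."""
    blocked = _policy_for(role)["blocked_fields"]
    return {key: value for key, value in record.items() if key not in blocked}
```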

Explainability

If an agent makes a decision, there needs to be a record and a reason. Explainability supports audits, reviews, and human trust.

Safety and Alignment

Agents must reflect company values, comply with regulation, and pursue business objectives rather than simply doing whatever is technically possible.

Trusted Agentic AI

A framework from Salesforce focused on five principles: safety, accuracy, honesty, empowerment, and sustainability. It’s a blueprint for building agents that people can actually rely on.

Delegated Accountability

As agents take on more work, it becomes critical to clarify responsibility. Whether it’s the designer, the operator, or the system owner, someone has to be answerable.

Why this matters

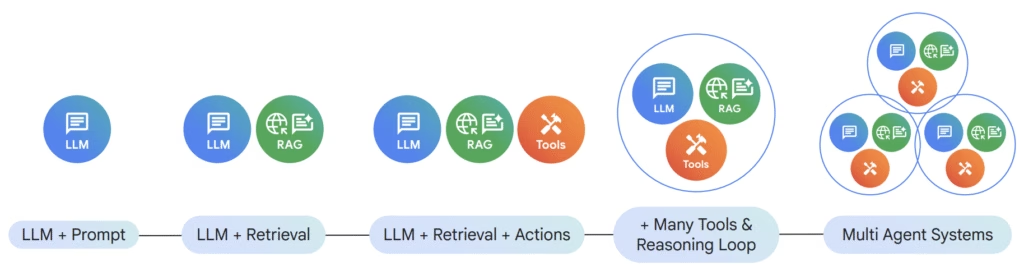

You don’t need to memorise every term here, but you should be familiar with the ideas they point to. Agentic AI has been moving from concept to capability for months. Whether it’s surfacing insights, coordinating workflows, or driving customer actions, agents are already showing up in the way people work. In the AI evolution overview above (courtesy of Google Cloud), we’re nearing the Multi-Agent Systems stage.

At Sirocco, we work with teams ready to build practical, responsible AI strategies. We focus on the foundations (people, process, and technology) so that agentic AI isn’t just a trend but a tool that fits your business. If this is on your radar, or already on your roadmap, we’re here to help make it real. To learn more about how popular agentic AI solutions are priced, check out our post Demystifying Agentic AI pricing: What to consider when evaluating different pricing models. Reach out today to start a conversation with our experts.